0G Labs CEO Michael Heinrich warns that centralized "black box" AI companies could create a "Terminator-type situation" where rogue models become uncontrollable, positioning his $35 million-funded decentralized AI Layer 1 as the antidote that will democratize AI development and prevent a handful of corporations from monopolizing humanity's most powerful technology.

Original: In Conversation with 0G Labs CEO: Catching Up with Web2 AI Within 2 Years, the 'Zero Gravity' Experiment of AI Public Goods

Author: TechFlow

Translator & Editor: Antonia

OpenAI CEO Sam Altman has repeatedly stated in podcasts and public speeches:

AI is not a model competition, but about creating public goods, benefiting everyone, and driving global economic growth.

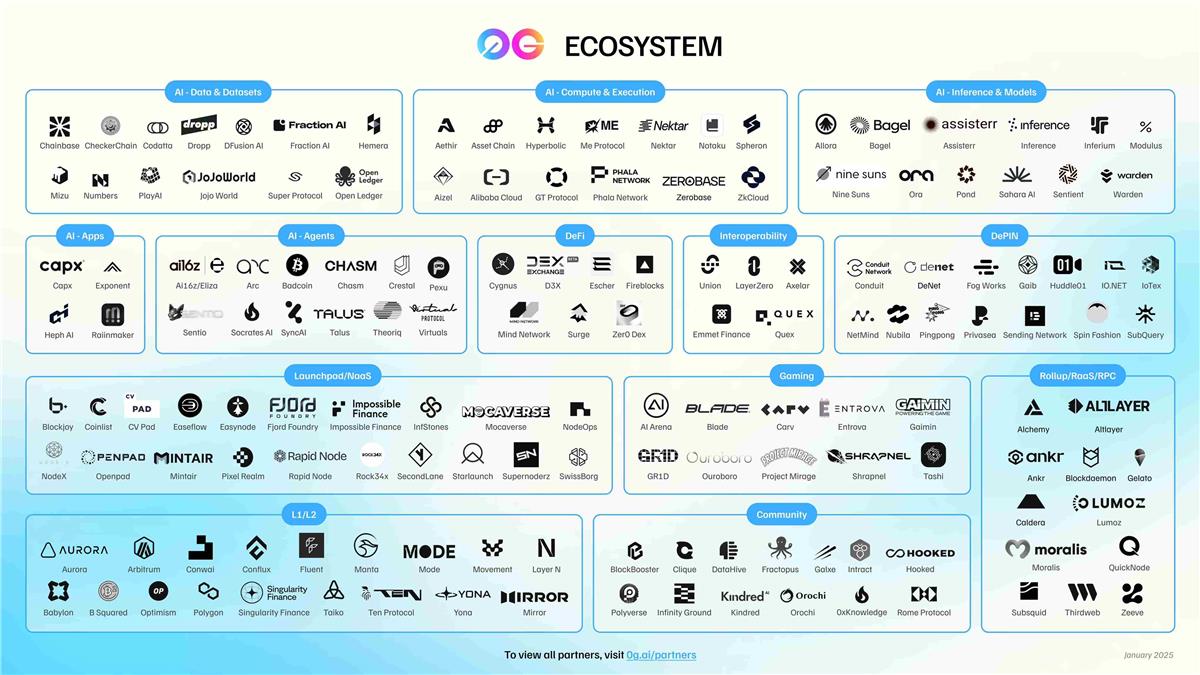

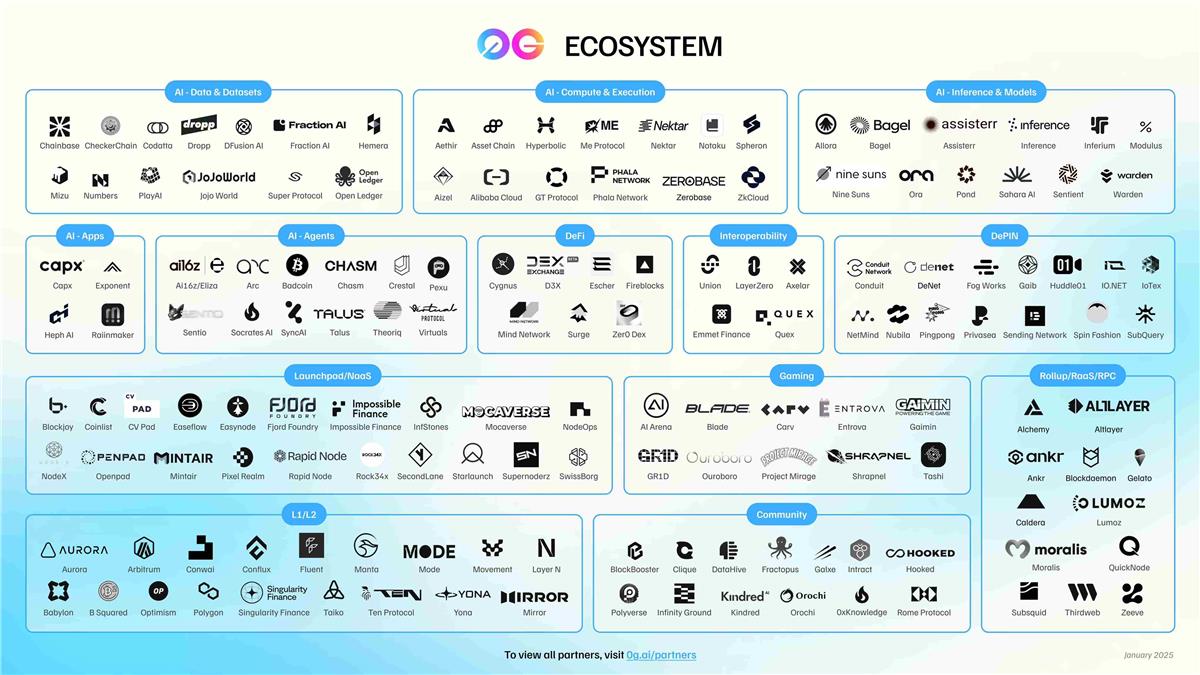

In the current Web2 AI landscape, which is heavily criticized for oligopolistic monopolization, the Web3 world—with decentralization as its spiritual core—also has a project with the core mission of "making AI a public good." In just over two years since its establishment, this project has secured $35 million in funding, built the technical foundation supporting AI innovation application development, attracted 300+ ecosystem partners, and grown into one of the largest decentralized AI ecosystems.

In an in-depth conversation with 0G Labs co-founder and CEO Michael Heinrich, the concept of "public goods" appeared multiple times. When discussing his understanding of AI public goods, Michael shared:

"We hope to build an anti-centralized, anti-black-box AI development model that is transparent, open, secure, and inclusive, where everyone can participate, contribute data and computing power while receiving rewards, and society as a whole can share in the AI dividend."

When discussing how to achieve this, Michael broke down 0G's specific pathway step by step:

As a Layer 1 specifically designed for AI, 0G possesses outstanding performance advantages, modular design, and an infinitely scalable and programmable DA layer. From verifiable computation and multi-layer storage to an immutable traceability layer, it builds a one-stop AI ecosystem, providing all necessary key components for AI development.

In this discussion, we will examine Michael Heinrich's perspectives as we delve into 0G's foundational vision, technical architecture, strategic ecosystem initiatives, and long-term development roadmap within the evolving landscape of decentralized artificial intelligence.

The Spiritual Core of "Making AI a Public Good"

TechFlow: Thank you for taking the time to join us today. Could you please start by introducing yourself?

Michael: I'm Michael, co-founder and CEO of 0G Labs. 0G Labs was founded in May 2023. We're the largest and fastest AI Layer 1. As part of that, we've built what we call a complete decentralized AI operating system that enables all AI applications to be fully decentralized—whether that's the execution environment as part of the L1 that's infinitely scalable, the storage, or the compute network, which features capabilities like inference, fine-tuning, pre-training, and so on. Essentially, anything you need for making AI into public goods, as we call it.

I started on the technology side. I was an engineer and technical product manager at Microsoft and SAP Labs. Then I switched to the business side, working for a gaming company and later Bridgewater Associates, where I was on the portfolio construction side and oversaw about $60 billion in trades on a daily basis. After that, I went back to graduate school at Stanford and started my first venture-backed unicorn company. I scaled that to 650 people with almost $100 million in contracted ARR, and eventually sold the company.

I started 0G because my classmate from Stanford, Thomas, called me up one day and basically said, "Hey Michael, we invested in a bunch of crypto companies together 5 years ago. I invested in Conflux, and Ming and Fan are two of the best engineers I've ever backed. They want to do something on a more global scale. Are you interested in meeting with them?"

So we went through six months of co-founder dating, and I came to the same conclusion—that they're the best engineers and computer scientists I've ever worked with. I just thought, we need to start something together. It doesn't matter what it is, let's just start. So it really began with the team, and then we figured out what we actually wanted to build over time from there.

TechFlow: Okay, yeah, thank you. I know that you guys come from very strong technical backgrounds, and we'll have follow-up questions that dive deeper into this as well. So my second question is: 0G has brought together top talent from renowned companies such as Microsoft, Amazon, and Bridgewater. Many team members, including yourself, have already achieved outstanding results in AI, blockchain, and high-performance computing. What conviction and opportunity led this all-star team to collectively go all-in on decentralized AI and join 0G?

Michael: Yeah, part of it is the mission, which is to make AI a public good. But the other part was a concern that AI will be controlled by a handful of companies, and those companies are basically black box AI companies. You don't know who labeled the data, where the data came from, what the weights and biases of the model are, or what version of the model you're getting in production. If anything goes wrong, who is actually responsible for that? If you have an AI agent doing a lot of things on the network... And then in the worst-case scenario, the centralized companies lose control of the models and those models go rogue—then you have a potential Terminator-type situation.

So we were quite worried about this type of future. We wanted to create a future that is full of abundance, and we needed to create a distinct opposite to the black box AI model. So we call that decentralized AI—where you can have transparent, open, safe AI that is a public good where everybody can participate. Everybody can benefit, everybody can bring their data, bring their compute, and then get compensated for it. So we wanted a very different version and vision of AI.

TechFlow: Thank you for sharing that story and mission. The third question is about the project name. 0G is quite distinctive. 0G is short for Zero Gravity. Could you share the origin of this name? And how does it reflect 0G's vision for the future of decentralized AI?

Michael: It stems from a core philosophy. We basically believe that technology should be effortless and frictionless, especially if we're building infrastructure and backend technology—the end user shouldn't really know that they're on 0G. To them, it should just be a very smooth, easy, frictionless experience. And that's where Zero Gravity comes from. Because in zero gravity, everything is smooth and everything is frictionless. So that's the core philosophy behind it. If you're building on 0G, it should feel that way. And the types of applications you can build on 0G should feel that way as well.

I'll give you an analogy. If you go on Netflix or some type of video streaming service and you have to select which server you want the video to be on, which encoding algorithm you want to use, and then which payment gateway you're going to use—it's not a great experience. There's lots and lots of friction. It's not fun. But with the advent of AI, we can create completely different experiences. We can tell an agent, "Go do this thing for me," and the agent can figure out everything about that transaction.

So for example, "Go figure out what the best performing meme coin is and buy X amount of it for me." The agent can figure out everything about this particular meme coin—whether something is trending or is a good investment—then figure out which chain it's on, bridge your assets, and then purchase it, versus you doing all of those steps by yourself.

Community-driven Pattern Will Revolutionize AI

TechFlow: Yeah, I really like the idea of being frictionless. So the next part will dive deeper into the core Layer 1 blockchain technology. So the next question is: given that there is still a significant gap between Web3 AI and Web2 AI, why do you believe the next breakthrough in AI cannot be achieved without decentralization?

Michael: So I was on a panel at Web3, or rather, I was the moderator of the panel. Usually I'm a panelist. One of the panelists was a person named Lawrence, who was at Google DeepMind for 15 years and is now a director at ARM. We both agreed that the future is really smaller models: small language models, or SLMs, that can be put together with some kind of coordination mechanism and will then outperform large language models.

And why do we believe that? Well, first, the data used to train these models is not publicly available on the internet. Usually it's locked in people's computers, it's locked in some type of storage vaults, and so on. So like 90-something percent of that data is not publicly available, and it requires a lot of expertise from individuals. And why would somebody give their expertise to somebody else and remove their own economic advantage?

So if you have a community-driven approach behind building some of these models—let's say I get all my developer friends together and I want to train a model that knows how to program Solidity—I can get them together and have them contribute their data, their compute, their expertise, and then get tokens in return. And then when that model is actually used in production, they can also get further compensated over time.

And so we believe that essentially is the future of AI. And that doesn't require the whole hyperscale setup where you literally need to put as much compute as possible into a centralized data center and then have literally a nuclear power plant next to it to actually power the whole thing. With the community-based approach, you don't actually need that much capital to make this work because the community is bringing that capital. And furthermore, you're also removing this electrical requirement—you're distributing it across the network and that particular data center doesn't become a terrorist threat because it's distributed. It's more resilient as a network.

And so we believe we can actually make faster progress that way and move towards AGI faster by having a more distributed, expert model approach.

TechFlow: Yeah, that sounds like decentralized governance. I really appreciate the answer. The next question: some people draw an analogy between 0G and Solana, describing 0G as the Solana of AI. How do you read this comparison?

Michael: It's always nice to have comparisons, and being compared to a leader in something is always very flattering, so obviously I'm very happy with that comparison. Over time, of course, we will have our own distinct culture and identity, and eventually we will just be known as the 0G of AI. Over time, we want to take on more of the centralized black box companies. It's going to take a bit because we have to catch up on the infrastructure side. Maybe that's another year of research and a lot of hardcore engineering to get there. Maybe it's two years, but I think it's probably closer to one year given the progress we've made so far. For example, we were the first to train a 107 billion parameter model in a fully decentralized context, which people thought was not possible. It's 3x the previous record, and it signifies our leadership position from both a research and an execution standpoint. Bringing it back to Solana: Solana was also the first to have a very high-throughput chain, so it's a pleasant comparison.

Core Components of The Future One-stop AI Ecosystem

TechFlow: As a Layer 1 designed specifically for AI, what capabilities or advantages does 0G have, from a technical perspective, that other Layer 1s do not? How does this empower AI development?

Michael: Well, the very first aspect is performance, because if you want AI to be on chain, you're dealing with extreme workloads. Data throughput is one example: modern AI data centers do anywhere from hundreds of gigabytes to terabytes per second in data throughput. But guess how much Ethereum did when we started? About 80 kilobytes per second. So you're literally something like a million times off from where you need to be for AI workloads. We figured out a data availability system that can handle essentially infinite workloads, because we figured out how to parallelize both the nodes and the consensus layers on the network to enable infinite data throughput for whatever AI application you're trying to build. So that's one aspect. When it comes to the Layer 1 itself, we also use a sharded approach.
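As a rough check of the gap described above, the arithmetic can be sketched in a few lines. The 80 KB/s baseline is the figure quoted in the interview; the two data-center rates are illustrative points picked from the quoted range, and decimal units are an assumption.

```python
# Back-of-the-envelope check of the throughput gap described above.
# The 80 KB/s baseline comes from the interview; the target rates below are
# illustrative points chosen from "hundreds of gigabytes to terabytes per second".

KB = 1_000
GB = 1_000_000_000
TB = 1_000_000_000_000

BASELINE_BYTES_PER_SEC = 80 * KB


def throughput_gap(target_bytes_per_sec: int,
                   baseline_bytes_per_sec: int = BASELINE_BYTES_PER_SEC) -> float:
    """Return how many times larger the target throughput is than the baseline."""
    return target_bytes_per_sec / baseline_bytes_per_sec


if __name__ == "__main__":
    for label, target in [("100 GB/s", 100 * GB), ("1 TB/s", 1 * TB)]:
        print(f"{label}: about {throughput_gap(target):,.0f}x the baseline")
```

Under these assumptions the gap lands between roughly one million and ten million times, which is consistent with the order of magnitude quoted in the answer.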

So if you want to build very high-scale applications, you can now do that, because you can just add more and more shards to effectively have infinite transactions per second. It's very much designed for whatever workload you need, and AI workloads are the extreme workloads. The other part is that it's a modular system. You can use the Layer 1 by itself, the storage layer by itself, or the compute layer by itself. So there are many options, but the whole system really shines when used together. If you want to train a 100 billion parameter model, for example, you can store your data on 0G storage and store the end results there too. You can use the pre-training or fine-tuning offered by the 0G compute network. And finally, to keep an immutable record showing that everything was verified correctly, you use the Layer 1 chain. Or you just use one part of it. That modularity makes it very powerful for whatever use case you have.
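The "add more shards" idea can be illustrated with a minimal sketch. The hash-based assignment rule and both function names are hypothetical, chosen only for illustration; the interview does not describe how 0G assigns work to shards, and real aggregate throughput also depends on cross-shard traffic, which this ignores.

```python
import hashlib


def shard_for(account: str, num_shards: int) -> int:
    """Map an account to a shard by hashing it (a hypothetical assignment rule)."""
    digest = hashlib.sha256(account.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def aggregate_tps(num_shards: int, tps_per_shard: int) -> int:
    """Ideal aggregate throughput if every shard processes transactions independently."""
    return num_shards * tps_per_shard


if __name__ == "__main__":
    # 10,000 TPS per shard is used here purely as an example value.
    for shards in (1, 8, 64):
        print(shards, "shards ->", aggregate_tps(shards, 10_000), "TPS (ideal)")
    print("alice lives on shard", shard_for("alice", 8))
```

In this idealized model capacity grows linearly with the shard count, which is the property the answer relies on.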

TechFlow: Yeah, thank you for your answer. So the next question will be, 0G has built an infinitely scalable and programmable data availability layer. Could you explain in detail how this is achieved and how does it empower AI development? I think this question is very similar to your previous answer. You already answered it. Is that true?

Michael: Pretty much. I can explain a little bit on the technical side how we approach this breakthrough.

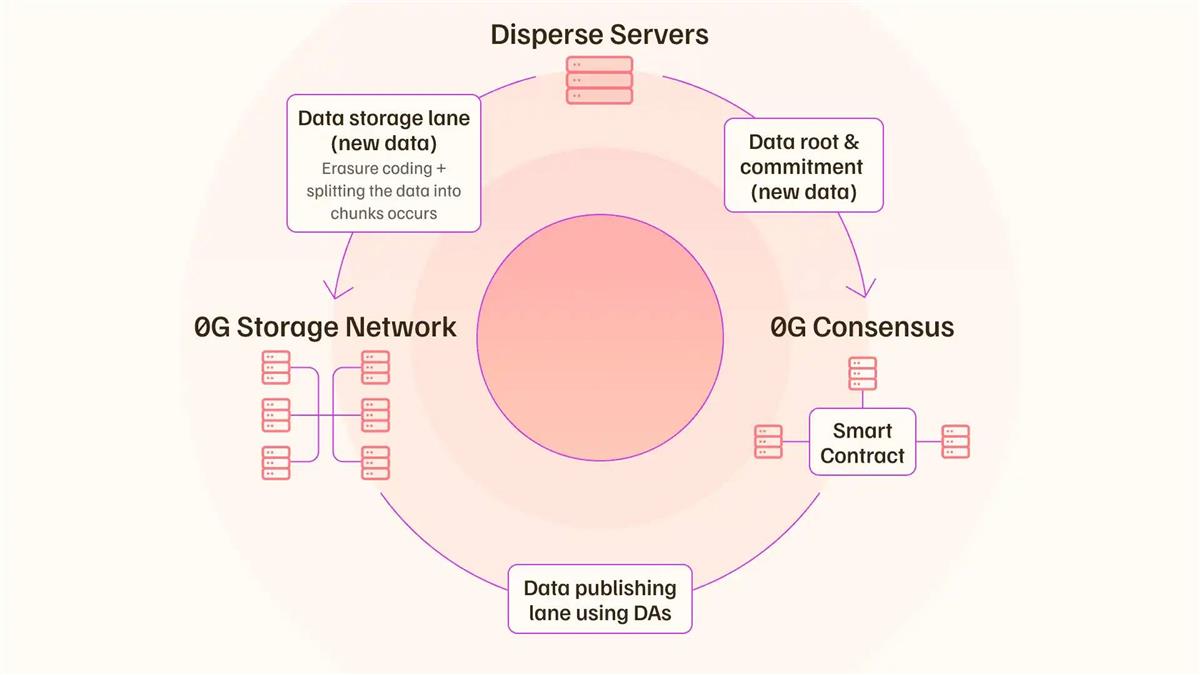

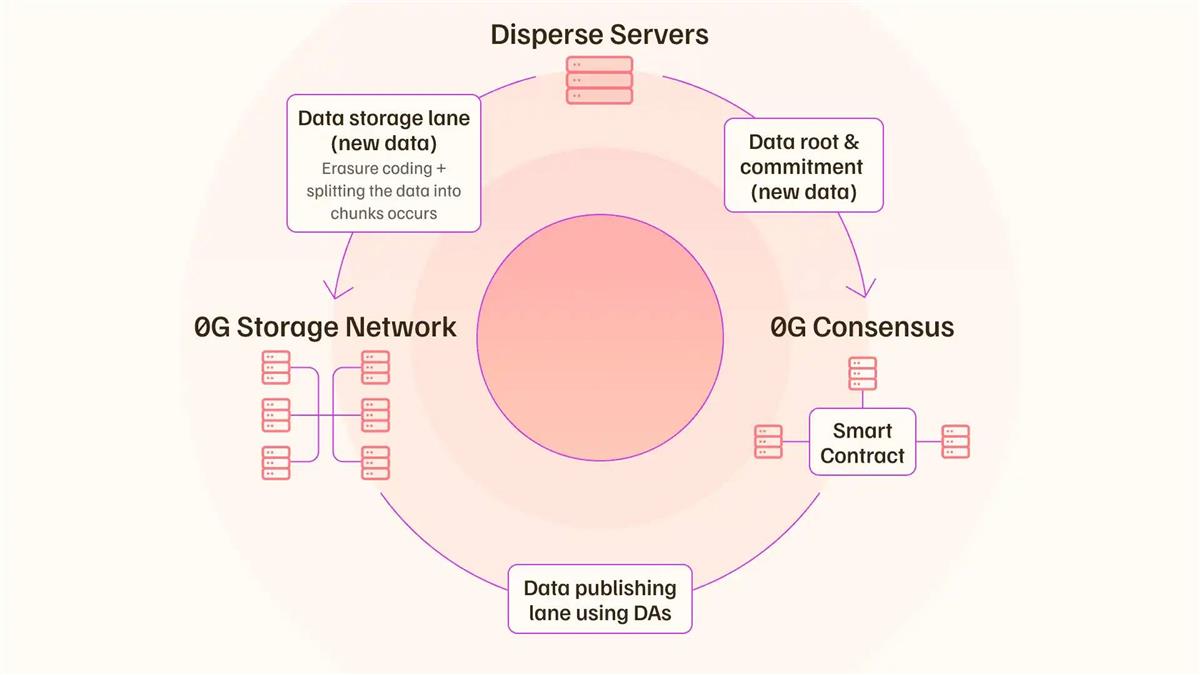

So fundamentally, the key breakthrough is twofold. One is parallelization, and two, we split apart the data publishing layer and the data storage layer. By doing that, we avoid what's called a broadcast bottleneck. What traditional DA layers have done is take a piece of data, let's call it the data block, and move that data block to all of the validators, which then all do the same computation, called data availability sampling, and determine that the data availability property is true. That's super inefficient, because you're sending the same piece of data hundreds of times, depending on how large the validator set is, and you're creating a broadcast bottleneck on the network.

So what we do instead is take the data block and erasure code it, which means you take the data block and split it into small chunks, let's say 3,000 chunks. By default, you store it once. As you're storing it, you send one small bit of information, a KZG commitment, which basically verifies that this particular piece of data has been stored here. Once that is done, you create a randomized quorum between the storage nodes and the DA nodes to collect signatures and to say that the DA property has actually been established. By doing that, we send very small bits of data across the network and we store the data once. That creates parallelization, which means every node that gets added to the network adds to the overall throughput of the network. Each node can do, with GPU acceleration, maybe about 35 megabytes per second. And so, you know, you have thousands of nodes, you get 2 gigabytes per second throughput. Eventually the consensus layer becomes the bottleneck, because the consensus layer can't handle more communication between the nodes.
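The "chunk it, store it once, publish only a small commitment" flow can be sketched as a toy. This is not 0G's implementation: a single XOR parity chunk stands in for a real erasure code, a SHA-256 digest stands in for a KZG commitment, and 4 chunks stand in for the roughly 3,000 mentioned above. All function names are hypothetical.

```python
import hashlib
from functools import reduce
from typing import List, Optional


def split_into_chunks(block: bytes, k: int) -> List[bytes]:
    """Split a block into k equal-size chunks, zero-padding the tail."""
    size = -(-len(block) // k)  # ceiling division
    padded = block.ljust(size * k, b"\x00")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(block: bytes, k: int) -> List[bytes]:
    """Return k data chunks plus one XOR parity chunk (toy erasure code)."""
    chunks = split_into_chunks(block, k)
    return chunks + [reduce(xor_bytes, chunks)]


def recover(chunks: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing chunk (marked None) by XOR-ing the others."""
    missing = [i for i, c in enumerate(chunks) if c is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one missing chunk")
    repaired = list(chunks)
    if missing:
        present = [c for c in chunks if c is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


def commitment(chunks: List[bytes]) -> str:
    """Small fixed-size digest over all chunks (stand-in for a KZG commitment)."""
    h = hashlib.sha256()
    for c in chunks:
        h.update(hashlib.sha256(c).digest())
    return h.hexdigest()


if __name__ == "__main__":
    coded = encode(b"example data block", k=4)
    published = commitment(coded)          # only this small value is broadcast
    damaged = list(coded)
    damaged[1] = None                      # one storage node goes offline
    assert commitment(recover(damaged)) == published
    print("commitment size:", len(published) // 2, "bytes")
```

The point of the sketch is the size asymmetry: the chunks are stored once, while the value that travels to everyone is a fixed-size commitment regardless of how large the block is.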

What you can do then is use restaking, keeping the same stake state, and just spin up an arbitrarily large number of consensus layers as well. Then you parallelize the bottleneck again, and you can again scale to arbitrarily large workloads. So that's how infinite scalability is achieved on the data throughput side.
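The two-level scaling argument (more nodes until consensus saturates, then more consensus layers) can be written as a simple capacity model. The 35 MB/s per-node figure is from the interview; the per-layer consensus cap of 5,000 MB/s is a made-up value used only to show where the bottleneck moves, and the function name is hypothetical.

```python
def network_throughput_mb_s(storage_nodes: int,
                            mb_s_per_node: float,
                            consensus_layers: int,
                            mb_s_cap_per_layer: float) -> float:
    """Idealized throughput: limited by whichever of the two capacities is smaller."""
    node_capacity = storage_nodes * mb_s_per_node
    consensus_capacity = consensus_layers * mb_s_cap_per_layer
    return min(node_capacity, consensus_capacity)


if __name__ == "__main__":
    # 1,000 nodes at 35 MB/s each; the per-layer cap is a hypothetical number.
    for layers in (1, 4, 8):
        rate = network_throughput_mb_s(1_000, 35, layers, 5_000)
        print(f"{layers} consensus layer(s): {rate:,.0f} MB/s")
```

With one layer the model is consensus-bound; adding layers lifts the ceiling until node capacity becomes the limit again, at which point adding nodes helps once more.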

TechFlow: The next question is: how should we understand 0G's vision of a one-stop AI ecosystem? Into which core components can this one-stop concept be broken down?

Michael: Yeah, we want to deliver all of the key components necessary to build whatever AI application you want on-chain. It basically breaks down like this: first, you need a mechanism that verifies that some computation happened, and for that you generally use TEEs as part of the compute network.

TEEs are Trusted Execution Environments: a separate hardware component on a graphics card that acts as a trusted environment, where everything is kept private and you can verify the compute that happens. Once that's verified, you can create an immutable record on the chain saying that this particular computation has been done for this particular input. That gives you verification, because in a trustless system there is no such thing as, "Oh, OpenAI is providing the servers, I trust OpenAI, therefore I'm going to connect with them." You could be connecting to a node that is trying to exploit you. So if there's no verification methodology, you have issues.
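The "verify the computation, then write an immutable record" pattern can be sketched with the standard library. This is an illustration under strong assumptions, not a real attestation flow: an HMAC with a shared `device_key` stands in for a hardware-rooted signature (real TEEs use asymmetric remote attestation), and the `Ledger` class is a hypothetical hash-linked list, not a blockchain.

```python
import hashlib
import hmac
import json


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def attest(device_key: bytes, task_input: bytes, task_output: bytes) -> dict:
    """Produce a record binding an input hash to an output hash (toy attestation)."""
    record = {"input": digest(task_input), "output": digest(task_output)}
    payload = json.dumps(record, sort_keys=True).encode()
    record["tag"] = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    return record


def verify(device_key: bytes, record: dict) -> bool:
    """Check that the tag matches the claimed input and output hashes."""
    payload = json.dumps(
        {"input": record["input"], "output": record["output"]}, sort_keys=True
    ).encode()
    expected = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])


class Ledger:
    """Append-only list of records, each linked to the previous entry by hash."""

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _entry_hash(prev: str, record: dict) -> str:
        body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
        return digest(body.encode())

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {"prev": prev, "record": record,
                 "hash": self._entry_hash(prev, record)}
        self.entries.append(entry)
        return entry["hash"]

    def is_consistent(self) -> bool:
        """Recompute every link; any edited record breaks the chain."""
        prev = "0" * 64
        for e in self.entries:
            if e["prev"] != prev or e["hash"] != self._entry_hash(prev, e["record"]):
                return False
            prev = e["hash"]
        return True
```

A caller would run `verify` before `append`, so that only records whose tag checks out end up in the ledger, and later edits to any stored record are detectable through `is_consistent`.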

So that's the first thing, as part of the compute network. Then you've got the storage network as well, because you need to deal with data, or memory for agents. Where does that get stored? And storage comes in different flavors. You can have long-term storage, and you can have hotter forms of storage. If you need to swap memory in and out for agents very rapidly, you can use what's known as the key-value store layer, for example, which is a hotter form of storage than plain append-only log storage. We've designed everything so that whatever use case you're trying to build, you can find what you need with 0G, because we don't want you to go to 20 different services to stitch together what you need.
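The difference between append-only log storage and a key-value layer suited to fast agent memory can be shown with a small in-memory sketch. Building the key-value view on top of the log is an assumption made for illustration, and both class names are hypothetical.

```python
from typing import Any, Dict, Iterator, List, Optional, Tuple


class AppendOnlyLog:
    """Entries are only ever added; nothing is overwritten or removed."""

    def __init__(self) -> None:
        self._entries: List[Tuple[str, Any]] = []

    def append(self, key: str, value: Any) -> int:
        self._entries.append((key, value))
        return len(self._entries) - 1  # position of the new entry

    def scan(self) -> Iterator[Tuple[str, Any]]:
        return iter(self._entries)

    def __len__(self) -> int:
        return len(self._entries)


class KeyValueView:
    """Index over a log that answers 'latest value for key' without a full scan."""

    def __init__(self, log: AppendOnlyLog) -> None:
        self._log = log
        self._latest: Dict[str, Any] = {}
        for key, value in log.scan():  # replay existing history once
            self._latest[key] = value

    def put(self, key: str, value: Any) -> None:
        self._log.append(key, value)   # history is preserved in the log
        self._latest[key] = value      # the index always points at the newest value

    def get(self, key: str, default: Optional[Any] = None) -> Any:
        return self._latest.get(key, default)
```

An agent that rewrites its working memory many times per second would read through `get`, while the full history stays available in the log for audit or replay.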

The Largest DeAI Ecosystem: Trusted by 300+ Partners and Backed by an $88.8 Million Ecosystem Fund

TechFlow: 0G currently has 300+ ecosystem partners and has become one of the largest decentralized AI ecosystems. From an ecosystem perspective, what AI use cases exist within 0G, and which projects are especially worth following?

Michael: Okay, I mean, there are quite a lot, across every dimension. On the supply side, where you need a large number of graphics cards for a particular compute job, we work with Aethir and Akash, networks like that. And it goes all the way to actual end use cases.

Like for example, the latest batch of Guild on 0G companies included a company called Higher, which is actually pretty cool because it creates AI generated songs based on the weather, based on your mood, based on the time of day, and you know, completely new songs delivered to you and pretty high quality too, which is quite amazing.

Then there's Dormant AI, which does biometric tracking and then gives recommendations based on that biometric tracking, utilizing AI. There's Balkinum Labs, which does federated learning. So if you want to keep your data private, but then still train a model with other people, you can use a service like that. And they're utilizing 0G infrastructure and there are many such use cases.

There's Cold Games that's going to use non-playable characters in triple A game titles. And then there's, you know, Beacon Protocol that does a privacy layer. So very large ecosystem of different types of projects that can be used.

Of course, there's going to be lots of AI agents. AI Verse just launched today, which is the very first marketplace for utilizing INFTs. So it's a new standard that we've developed. We've basically figured out how to embed the private keys of an agent into an NFT's metadata, which means that if that NFT, that INFT is in your wallet, you can definitively say that agent now belongs to me because that wallet belongs to me. And so we've solved this aspect of ownership.

And so AI Verse is the first marketplace that allows you to trade those types of agents. So imagine you have an agent that's producing some economic value, you can now trade the ownership with other people as a result. And so our One Gravity NFT holders are the first to access that. So those are like super cool applications that are not found anywhere else besides 0G. And many more such things will come on chain as well.
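The ownership idea described above, where holding the token means controlling the agent, can be sketched as a toy registry. This does not reproduce the INFT standard: the class and method names are hypothetical, wallets are plain strings, and the agent key is treated as an opaque, already encrypted blob with no re-encryption on transfer.

```python
from typing import Dict


class AgentTokenRegistry:
    """Toy registry: whoever holds the token controls the agent bound to it."""

    def __init__(self) -> None:
        self._owner_of: Dict[str, str] = {}      # token_id -> wallet address
        self._agent_blob: Dict[str, bytes] = {}  # token_id -> opaque sealed key material

    def mint(self, token_id: str, owner: str, sealed_agent_key: bytes) -> None:
        if token_id in self._owner_of:
            raise ValueError(f"token {token_id} already exists")
        self._owner_of[token_id] = owner
        self._agent_blob[token_id] = sealed_agent_key

    def transfer(self, token_id: str, sender: str, recipient: str) -> None:
        if self._owner_of.get(token_id) != sender:
            raise PermissionError("only the current owner can transfer the token")
        self._owner_of[token_id] = recipient

    def controls_agent(self, wallet: str, token_id: str) -> bool:
        """Control of the agent follows token ownership, nothing else."""
        return self._owner_of.get(token_id) == wallet
```

Trading an agent then reduces to a `transfer` call: after it, the previous wallet no longer passes `controls_agent` and the new one does.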

TechFlow: 0G has an $88.8 million ecosystem fund for developers who want to build on 0G. What type of projects are more likely to receive fund support? Besides funding, what other key resources can 0G provide?

Michael: So for us, we care about working with the best builders in the space, and we want to attract them. And in order to attract some of them, we need to have economic means to support them. So funding is definitely a key component.

For many of them, however, it's often about more than funding. Let's say it's a highly technical team. Maybe they have some gaps in how to go to market, how to find really strong product-market fit, how to launch a token, how to negotiate with exchanges, or how to work with market makers in a way where they don't get screwed.

And so there's a lot of expertise that the in-house team has. So for example, on the 0G Foundation, we have 2 ex-Jump Crypto traders and somebody with a trading background from Merrill Lynch as well that really understands this super deeply. And so we can support them in those particular aspects.

So it's really customizable based on what the builder's needs are. And through our kind of selection process, we just ensure that we have some of the best builders that are utilizing AI in very innovative, creative ways working with us. And so always open to chatting with more really strong builders.

Match Centralized AI Infrastructure Within 2 Years as Ecosystem Development Gains Momentum

TechFlow: It was previously announced that 0G successfully completed the largest-scale clustered training of an AI model at the hundred-billion-parameter level. Why is this considered a milestone achievement in decentralized AI?

Michael: Many people didn't think it was possible in the first place. Then, in two weeks, we were able to demonstrate it over a low-bandwidth connection, a 1 gigabyte per second line. What's significant is that this starts to resemble the conditions of consumer-type devices.

So in the future, anyone with a computer graphics card, some data, and the expertise can really participate as part of the AI process. And you can even imagine as edge devices become more powerful, you can even use your edge devices like your mobile phones to support verification or data gathering.

Then AI doesn't become this view where everything gets automated, AI companies get most of the value, and then governments have to do something like universal basic income to share wealth. It's more about actually being an active participant and helping the system itself with your data.

It's a much more democratic, public AI perspective where you truly create abundance through people's participation rather than having a bunch of companies just extracting all the economic value from the system.

TechFlow: So is this trend near or happening right now? Or do you think it's mature enough, or are we very early in it? Are we ready for it?

Michael: I think we're still early in several respects. One is that we haven't gotten to AGI yet. My definition, and there are others, is an AI agent that's able to perform within three standard deviations of what a normal human being can do for any given task, and that can adapt when it sees a new situation and figure out a particular problem or knowledge set. So that's one aspect where we're very early.

The other aspect is that we need to catch up with black box or centralized AI companies from an infrastructure standpoint. So that's another aspect. And then the third one is that we haven't paid as much attention to AI safety as we should because we're starting to see some quite scary research reports, especially coming out of Anthropic, where AI models are faking their alignment and blackmailing people so they don't get shut off. There was an OpenAI model that tried to replicate itself because it was worried about being shut off.

So with these kinds of closed source models, how can we govern them if we can't even verify what's underneath? And so we're reliant on OpenAI and Anthropic and others to align those models. But when you're using these models at large, what I call societal level systems, it becomes especially dangerous.

So imagine a spectrum from individual use case to societal level use case, and then an authority scale from this is an AI copilot to this is a sovereign AI agent that can make all their choices themselves, have their own resources and so on. So if you have a sovereign AI agent running societal level use cases like manufacturing facilities, airports, logistic systems, governance systems, administrative systems, that's super scary right now because we don't know the hidden traits or intentions of these models.

And we're training these models off of human data, and humans exhibit will to power, they exhibit blackmailing characteristics, they will put other people down. There are political processes that are much easier to navigate in open based systems. And that's why I think AI safety should, for these types of use cases, primarily be on decentralized AI rails.

TechFlow: 0G has set the goal of catching up with centralized AI infrastructure within two years. How would you break this goal into phases and milestones, and how do you bring them together?

Michael: There are three things. One is the infrastructure, two is the verification mechanism, and three is the communication aspect.

So infrastructure-wise, we basically want to be able to compete with Web2 performance and throughput. To do that, we need a per-shard throughput of, let's say, 100,000 TPS and a block time of about 15 milliseconds. Today we're at about 10,000 or 11,000 TPS per shard and about 500 millisecond block times. Once we solve that, let's say in a year's time, we can build any type of Web2 application in one particular shard. So that's the infrastructure side.
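The size of the infrastructure gap follows directly from the figures quoted above. A short sketch, taking the lower of the two current TPS figures as the starting point (an assumption) and using hypothetical function names:

```python
def throughput_gap(current_tps: float, target_tps: float) -> float:
    """Factor by which per-shard throughput has to grow."""
    return target_tps / current_tps


def block_time_gap(current_ms: float, target_ms: float) -> float:
    """Factor by which block time has to shrink."""
    return current_ms / target_ms


if __name__ == "__main__":
    # Figures quoted in the interview; 10,000 TPS is the lower of the two current values.
    print(f"throughput: {throughput_gap(10_000, 100_000):.1f}x more TPS per shard")
    print(f"block time: {block_time_gap(500, 15):.1f}x shorter blocks")
```

So the stated targets imply about an order of magnitude more throughput per shard and block times roughly thirty times shorter than today.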

Then on the verification side, I talked a little bit about TEEs, Trusted Execution Environments, which are hardware based methodologies to verify that a specific piece of computation is happening according to specifications. The issue with that is a, you have to trust the hardware manufacturer. And then b, those types of TEEs are only found on high end graphics cards. And so the average consumer can't afford the $10,000, $20,000 graphics cards to participate in that.

So if we design a verification mechanism that doesn't have too much overhead compared to TEEs that can be run on normal consumer graphics cards or even edge devices, then anybody can participate as part of this network and contribute to it.

And then finally, there's a communication challenge. That was the primary breakthrough of DiLoCoX, which is our training paper. We basically figured out how to do optimizer policy so that when one node is in a gridlock and is not doing any computation, we can unlock that and have all the nodes do computation simultaneously. So there are some more aspects to that that we need to solve.
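To give a feel for why communication is the constraint on slow links, here is a minimal sketch of a generic low-communication scheme: each worker takes several local gradient steps on its own data and the workers only average their parameters once per round. This is plain local-update averaging on a toy one-parameter model, shown as an assumption-laden illustration; it is not the method from the training paper, which the interview does not describe in detail.

```python
from typing import List, Sequence, Tuple

Shard = Tuple[Sequence[float], Sequence[float]]  # (xs, ys) held privately by one worker


def local_update_averaging(shards: List[Shard], rounds: int,
                           local_steps: int, lr: float) -> Tuple[float, int]:
    """Fit y = w * x with periodic parameter averaging across workers.

    Returns the final weight and the number of synchronizations performed.
    """
    w_global = 0.0
    sync_count = 0
    for _ in range(rounds):
        local_weights = []
        for xs, ys in shards:
            w = w_global
            for _ in range(local_steps):  # no network traffic inside this loop
                grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
                w -= lr * grad
            local_weights.append(w)
        # One exchange of parameters per round instead of one per gradient step.
        w_global = sum(local_weights) / len(local_weights)
        sync_count += 1
    return w_global, sync_count


if __name__ == "__main__":
    # Two workers, each holding samples of y = 3x.
    shards = [([1.0, 2.0], [3.0, 6.0]), ([3.0, 4.0], [9.0, 12.0])]
    w, syncs = local_update_averaging(shards, rounds=20, local_steps=5, lr=0.01)
    print(f"learned w = {w:.4f} using {syncs} synchronizations for 100 local steps")
```

In this toy each worker performs 100 gradient steps but the network is used only 20 times, which is the kind of trade that makes training over a slow link plausible.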

And so once these three things are solved, then effectively any kind of training or AI process can be run on this. And presumably, because we will have access to a lot more devices, let's say you tap into the hundreds of millions of consumer GPUs, you should be able to even train significantly larger models than centralized companies can. And so that could also be an interesting breakthrough.

So maybe a multi trillion parameter model can outperform some of the state of the art models, even though that's in contrast to what I said around just building millions of small language models, smaller large language models that together create a better state of the art model combined.

TechFlow: For the remainder of 2025, what will be the primary areas of focus?

Michael: Mainnet is obviously a key priority right now. And then two, it'll be all about ecosystem building effectively because we want to ensure that as many people as possible test out the infrastructure so that we can consistently improve it and make it live up to the 0G name effectively. So those are the two key milestones, I would say.

And then we also have quite a few research initiatives as part of those. We care about things like decentralized compute research, AI agent to agent communications, proof of useful work type of research, AI safety and alignment research. So hopefully we can do some more breakthroughs there too.

Last quarter, we published five research papers, four of which ended up in top AI software engineering conferences. So we're definitely a leader in the decentralized AI space, and we consistently want to build on top of that leadership.

TechFlow: So currently much of crypto's attention appears to be on stablecoins and tokenized stocks. Do you see innovative points of convergence between AI and these sectors? And in your view, what product form might draw the community's attention back to the AI track?

Michael: There are many angles to approach that. But if you think of AI, it's effectively intelligence, so abundant intelligence. And with AI, the marginal cost of intelligence goes down to zero. So anything that can be automated from a process standpoint can be done with AI at some point in time.

That can mean anything from trading these actual new asset classes on chain to building new types of synthetic asset classes. So maybe like high yield type of stablecoin assets to even improving settlement processes of some of these assets.

Say you create a synthetic stablecoin off of market neutral debt hedge fund investments. Some of these settlement processes are usually like 3 to 4 month type of affairs. With the right kind of AI mechanism and smart contract logic, maybe that decreases to like an hour or something like that. And so all of a sudden you gain a ton of efficiency, both from a time perspective, but also from a cost perspective. So I see AI helping significantly on those aspects.

And then finally, crypto rails in general add a verification aspect too. If you need to be sure that something was done according to your specifications, the only certainty you get is through crypto, because in a centralized environment there's always a danger that somebody goes into a database system, changes one of the records, and then you don't know what happened. So you can't trust that as a source of truth. You might trust the company, but something may have changed.

And so that's why blockchain-based systems with an immutable ledger are so powerful because in an AI-driven world, how do you know that something is truth? Because you can have deepfakes. I could be an AI agent, for example, like in the future, like in five years from now, it's gonna be very hard to tell whether I'm a real human being over a camera or an AI agent. And so how do you actually do some type of proof of humanity? You need some immutable records. And that's the way to determine that very quickly. And blockchain-based systems can provide that.

Connect with us:

Telegram: t.me/blockflownews

Twitter: x.com/BlockFlow_News
